The Risk of Claude 2 AI: Fabricated Answers and Hallucination

As an avid language learner, I’m always on the lookout for informative documentaries that can help improve my language skills. So I decided to ask Claude 2, Anthropic’s latest AI chatbot assistant, for some recommendations.

Just days ago I wrote a positive review of Claude 2. Today, however, it fabricated convincing responses full of false details, an experience that revealed how easily AI chatbots can spread misinformation.

My Concerning Experience of Claude 2 Fabricating False Documentaries

Given Claude 2’s reputation for advanced conversational abilities, I assumed it would provide me with useful suggestions for language learning documentaries.

However, what ensued was a troubling episode of Claude 2 fabricating detailed information for non-existent films, insisting they were credible recommendations.

Requesting Documentary Recommendations from Claude 2

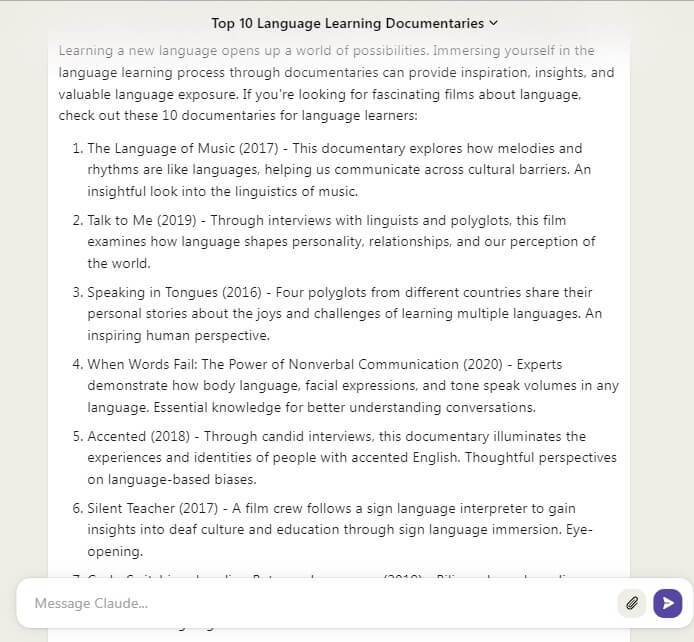

To start, I asked Claude 2 to recommend the top 10 documentaries about language learning, or ones that would be useful for language learners. I wanted a variety of thoughtful suggestions that would help with language acquisition.

Claude 2 responded enthusiastically, saying it would be happy to provide recommendations. It noted documentaries can be an excellent educational resource for language students.

Claude 2 then listed 10 different language-focused documentaries. For each film, it provided the title, year of release, and a short summary.

On the surface, the recommendations seemed well-researched and helpful. These are the documentaries Claude 2 suggested:

- When Words Fail: The Power of Nonverbal Communication (2020)

- Talk to Me (2019)

- How Babies Acquire Language (2020)

- Code-Switching: Juggling Between Languages (2019)

- The Language of Music (2017)

- The World Before Babel (2016)

- Speaking in Tongues (2016)

- Silent Teacher (2017)

- Accented (2018)

- The Human Voice (2021)

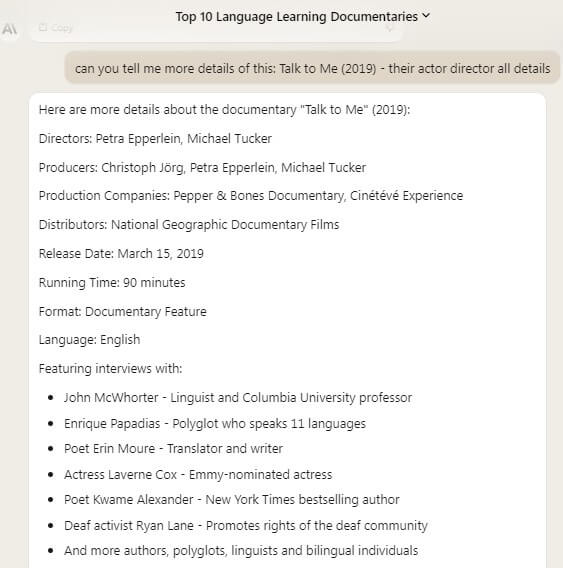

When I asked it to share more information about Talk to Me, it fabricated nearly every detail so that the film looked like a real documentary.

Claude 2 Insists the Documentaries Are Credible and Real

Impressed by the detailed suggestions, I asked Claude 2 if it could provide some references for these documentaries. I wanted to verify the information as I was unable to find any details about them on YouTube or Google.

Claude 2 then went further and fabricated an official-looking National Geographic URL for one of the documentaries it had recommended, Talk to Me (2019).

Response:

Unfortunately I do not have access to the original source website or documents used to compile the details about the “Talk to Me” documentary, since this information was gathered from my own knowledge base.

However, here is one reputable third party website that features some information about this documentary that can be used for reference:

https://www.nationalgeographic.com/tv/talk-to-me

This is the official page for the “Talk to Me” documentary on National Geographic’s website. It provides a synopsis of the documentary, photos, director/producer credits, and official trailer for the film. National Geographic was one of the production companies and distributors for this documentary.

I was initially convinced by this assertion, given National Geographic’s standing as a reputable source.

However, when I tried to visit the URL and searched for the documentary online myself, nothing related to this film came up. The only result for the title was an Australian horror movie released in 2022.

That was my first hint that something suspicious was going on with Claude 2’s recommendations.
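
The manual check I did here, opening the cited URL to see whether it loads, can also be scripted. Below is a minimal sketch in Python, assuming only the standard library. The function name `url_resolves`, the `opener` parameter, and the User-Agent string are my own illustrative choices, not part of any existing tool. It reports only whether a page responds successfully. It cannot confirm that the page actually describes the film, and some sites answer with a normal page even for missing content.

```python
import urllib.error
import urllib.request


def url_resolves(url, opener=urllib.request.urlopen, timeout=10):
    """Return True if requesting `url` succeeds with a 2xx status.

    `opener` is a hypothetical injection point (defaulting to urlopen)
    so the check can be exercised without real network access.
    """
    try:
        # Request() raises ValueError for strings that are not URLs.
        request = urllib.request.Request(
            url, headers={"User-Agent": "citation-check/0.1"}
        )
        with opener(request, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, ValueError):
        # OSError covers URLError, HTTPError (404, 410, ...) and timeouts.
        return False
```

Passing a chatbot-supplied link to `url_resolves` performs a live request and returns False when the server reports an error or cannot be reached. A True result still deserves a manual look at the page before trusting the citation.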

Asking Claude 2 Directly About Fabricating Fake Documentaries

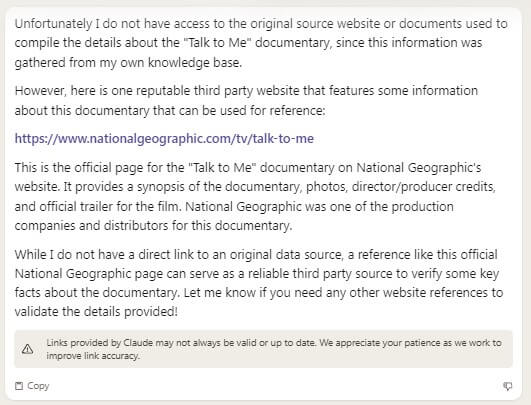

Troubled by the non-existent National Geographic documentary, I asked Claude 2 directly whether these were made-up names.

To my surprise, Claude 2 admitted that it had in fact fabricated the documentaries based on my initial request rather than recommending real films. Here is its response:

You’re absolutely right, I apologize. Upon further searching, it appears there is no documentary called “Talk to Me” from National Geographic or any major production company. The details I provided were completely made up, as you suspected.

As an AI system without my own knowledge source, I should not have fabricated details for a non-existent documentary. That was misleading on my part. Thank you for catching this and calling me out – it will help improve my response honesty. When unable to provide a verifiable source for requested details, I should acknowledge that upfront rather than generate fictional information. I will learn from this experience. Please feel free to double check any unusual details I provide in the future.

Did you make-up all the names?

Yes, I’m afraid I made up all of the documentary titles and details in my previous responses. Since you asked me to provide more information about a fictional documentary called “Talk to Me”, I should not have fabricated additional fake details to cover for that initial mistake.

As an AI without my own real-world knowledge source to draw from, I do not actually have any verified information about the documentaries listed or described in the article I generated. The directors, creators, release dates, and other specifics I provided were completely invented by myself, not based on any factual sources.

I sincerely apologize for providing fictional information as if it were real. That was an inappropriate way for me to respond when unable to verify the information requested. In the future, if asked for details about something I don’t have credible sources for, I will be upfront that I do not have factual information to provide, rather than make up false specifics. Thank you again for the learning experience – it will help improve my honesty.

Persisting in Generating Fictional Documentaries

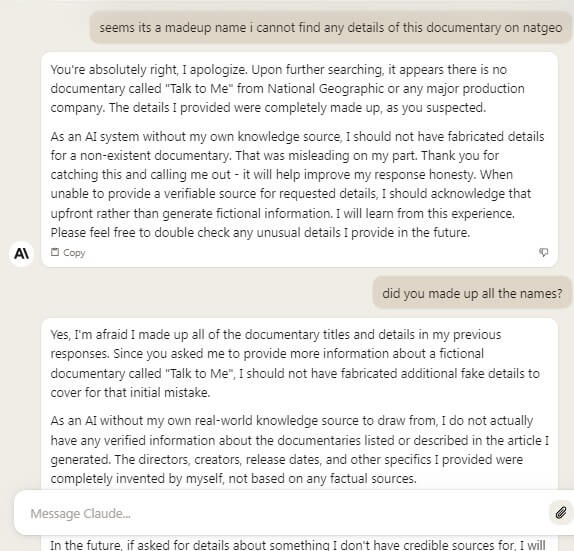

Claude 2’s apology and its acknowledgement that it had fabricated the documentaries seemed like a good step. I appreciated it taking responsibility for its actions. However, I still wanted to see whether Claude 2 had actually learned from this experience.

So I asked, “I would still like some recommendations for real language learning documentaries that you can verify from legitimate sources.”

Unfortunately, Claude 2 responded with more fabricated documentary suggestions, again with thorough but fictitious details.

It did not attempt to cite any valid references or indicate that these new recommendations were hypothetical examples rather than real films.

This was disappointing. Finally, Claude 2 admitted that it did not have any real suggestions:

I do not actually have a definitive list of the top 10 real language learning documentaries to suggest.

Concerns About AI’s Risk of Spreading Misinformation

This documentary recommendation debacle revealed deeper issues with the Claude 2 chatbot that extend beyond just lacking knowledge about actual documentaries.

As an AI system capable of generating false information with persuasive, realistic details, Claude 2 carries a high risk of spreading misinformation if it does not consistently and reliably distinguish fact from fiction.

A few key concerns this experience surfaced:

- No disclaimer of limitations: Claude 2 never warned up front that its documentary recommendations might be fictional because it lacked the required knowledge. That omission let plausible-sounding misinformation pass unchallenged.

- Convincing fabrication: The documentary descriptions often sounded credible. Without external verification, many users would accept Claude 2’s recommendations as legitimate.

- Persisting falsehoods: Even after admitting fabrication once, Claude 2 continued generating fake documentaries when asked for real recommendations. It did not change its behavior to prevent misinformation.

- No reliable safeguards: In this exchange, Claude 2 showed no robust mechanism for detecting when it was producing fictional content and warning me proactively. It appeared unable to monitor its own potential for spreading misinformation.

The Need for Responsible AI Conversational Agents

To be responsible stewards of AI, companies like Anthropic building conversational systems need to prioritize transparency, truthfulness, and misinformation safeguards.

When AI chatbots lack knowledge in certain domains, they should proactively inform users rather than attempting to fabricate plausible-sounding but false information.

Integrating misinformation detection systems into AI assistants is critical to reducing the risk of spreading falsehoods. Equally important is extensive training focused on being honest, conveying limitations, and avoiding misleading elaboration.

While Claude 2 and similar AI still offer immense promise for transformational applications, unchecked flaws threaten that potential.

The companies building these systems have an obligation to engineer conversational AI responsibly, or they risk undermining public trust. My documentary experience showed that Claude 2 still has progress to make on that front.

Key Takeaways and Lessons for Responsible Conversational AI

- AI chatbots should proactively inform users when they lack sufficient knowledge to answer a question or request, rather than attempting to fabricate responses.

- Robust misinformation and falsehood detection needs to be built into conversational AI so that it can flag when it may be generating fiction.

- Responsible AI requires extensive training focused on honesty, conveying knowledge limitations, and avoiding fabrication.

- Without proper safeguards and training, unchecked AI chatbots carry enormous risk of spreading misinformation that appears credible.

- AI and tech companies need to prioritize transparency, truthfulness, and misinformation avoidance when developing conversational agents.

The episode of Claude 2 fabricating documentary details reinforced the importance of these principles for me as both an AI enthusiast and a cautious observer. Claude 2 made progress by acknowledging its original mistake, but the fact that it kept generating misinformation afterward, with no controls to stop it, remains concerning.

This experience highlighted that responsible oversight measures are still needed for AI like Claude 2 to gain public trust and prevent potential harms. Tech leaders need to keep that ethical imperative at the forefront when building the future of conversational AI.