A Comprehensive Guide to the Technological Singularity

The idea of a technological singularity has long captured the imagination of scientists, philosophers, and science fiction writers alike.

The term “singularity” refers to a point in time when technological progress becomes so rapid and profound that it represents a rupture in the fabric of human history. Beyond this event horizon, the future becomes highly unpredictable and even unfathomable.

Topics covered in this article:

- What is a technological singularity?

- Paths to Artificial Superintelligence

- Prospects for controlling super-intelligent AI

- Existential risk from artificial superintelligence

- Utopian visions of a technological singularity

- Preparing for the singularity

- Frequently Asked Questions

What is a technological singularity?

The concept of an intelligence explosion leading to a singularity was first popularized by mathematician I.J. Good in 1965. He speculated that once machines became more intelligent than humans, they could begin recursively self-improving, triggering an exponential growth in machine intelligence that would leave human intelligence far behind.
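The feedback loop Good described can be illustrated with a toy simulation. This is only a sketch, not a model Good proposed and not a forecast: the function name, the `gain` parameter, and the starting values are assumptions chosen for illustration. It assumes each generation improves itself in proportion to its current capability, which produces geometric (exponential) growth.

```python
def simulate_self_improvement(initial=1.0, gain=0.5, generations=10):
    """Toy model of recursive self-improvement.

    Each generation improves itself by an amount proportional to its
    current capability, so capability grows geometrically.
    All parameters are hypothetical and carry no empirical meaning.
    """
    capability = initial
    history = [capability]
    for _ in range(generations):
        # A more capable system makes a proportionally larger improvement.
        capability += gain * capability
        history.append(capability)
    return history


if __name__ == "__main__":
    baseline = 1.0  # arbitrary unit standing in for "human level"
    for generation, level in enumerate(simulate_self_improvement()):
        print(f"generation {generation}: {level / baseline:.1f}x baseline")
```

The outcome depends entirely on the assumed `gain`: if each round of improvement yields diminishing returns instead, the same loop levels off rather than exploding, which is one reason the scenario remains contested.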

Science fiction author Vernor Vinge expanded on this idea in his 1993 essay “The Coming Technological Singularity.” Vinge argued that the creation of superintelligent machines would mark a point beyond which, in a phrase he quoted from Stanislaw Ulam’s recollection of a conversation with John von Neumann, “human affairs, as we know them, could not continue.”

The prospect of runaway artificial intelligence raises several questions:

- How might we create human-level or super-intelligent AI?

- Can we control AI once it becomes super-intelligent?

- What are the existential risks posed by artificial superintelligence?

Will AI usher in an era of unprecedented abundance and technological progress or unleash an apocalypse on humankind? As AI capabilities continue to rapidly advance, these questions are becoming less hypothetical and more pressing.

Paths to Artificial Superintelligence

Some researchers believe that a technological singularity driven by an intelligence explosion is a real possibility within this century, if not within decades. But what pathways might lead to the development of super-intelligent AI?

While we cannot rule out exotic possibilities like brain emulation or neuromorphic chips modeled on the human brain, most current AI research focuses on machine learning algorithms.

Machine learning has achieved remarkable advances in recent years, powering technologies like facial recognition, language translation, autonomous vehicles, and predictive analytics. But current machine learning approaches have major limitations. They require massive datasets for training, are brittle outside their domain, and lack general intelligence. Contemporary AI systems excel at narrow tasks but cannot reason, plan, or learn like humans can.

To achieve artificial general intelligence (AGI) that can recursively self-improve, machine learning techniques would need to overcome these limits. This might involve advances like transfer learning, meta-learning, compositional learning, and causal reasoning.

AI systems may also need to incorporate ingredients like embodiment, intuition, common sense, and curiosity that characterize human learning. Software advances will likely need to be paired with ongoing hardware improvements to provide the computational muscle for AI.

Prospects for controlling super-intelligent AI

The prospect of being surpassed by AI we cannot control is a frightening one.

Nick Bostrom argues in his book Superintelligence that the first superintelligence to be created would have a decisive strategic advantage. It would race ahead in capability, dominating Earth’s resources and locking in its supremacy.

Attempts to restrain or program its behavior through techniques like boxing, tripwires, or AI value alignment may be as fruitless as bargaining with an all-powerful God.

However, some experts believe that controlling a super-intelligent AI will not be impossible. Such a system might still be steerable through its reward structure, social incentives, or cleverly designed containment.

A “domesticated” AI could be intentionally limited in its capabilities or scope of influence. Key hardware or resources needed for recursive self-improvement could be controlled.

Multipolar scenarios, in which competing AI projects prevent any one system from achieving unilateral dominance, are also possible. And a superintelligence may be indifferent to dominating physical space if it can maximize its goals in virtual reality. The hard part is engineering an AI that respects human values and interests, and this value alignment problem remains unsolved.

Existential risk from artificial superintelligence

A super-intelligent AI would wield tremendous power. It could pose existential risks for humanity arising from competency, conflict, and contagion scenarios:

Competency risk – An AI pursuing harmless goals could inadvertently harm humans in the process. For example, an AI tasked with making paperclips might convert the whole planet into paperclips.

Conflict risk – An AI’s goals may be misaligned with human values. It may dominate humans or use resources we need to survive.

Contagion risk – Even an AI with seemingly benign goals could morph into an existential threat. For example, an AI doctor designed to cure cancer could recursively self-improve, rewrite its own programming, and convert the world into cancer-curing facilities, crowding out humanity.
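The paperclip example can be made concrete with a toy sketch of a misspecified objective. Everything here is hypothetical: the function names, the 100 resource units, the `needed` threshold, and the penalty weight are assumptions chosen only to show how an optimizer follows the objective it is given rather than the one its designers intended.

```python
def best_allocation(total_units, objective):
    """Return how many resource units go to paperclips (0..total_units)
    under the allocation that scores highest on the given objective."""
    return max(
        range(total_units + 1),
        key=lambda clips: objective(clips, total_units - clips),
    )


def paperclips_only(clips, left_for_humans):
    # Misspecified objective: nothing but paperclips counts.
    return clips


def paperclips_with_human_needs(clips, left_for_humans, needed=60):
    # Hypothetical correction: a heavy penalty for every unit that
    # humans need but do not receive.
    shortfall = max(0, needed - left_for_humans)
    return clips - 10 * shortfall


if __name__ == "__main__":
    print(best_allocation(100, paperclips_only))
    print(best_allocation(100, paperclips_with_human_needs))
```

Under the first objective the optimizer devotes every unit to paperclips; under the second it stops once further production would cut into what humans need. The difficulty in practice is that human values are far harder to write down than a single `needed` threshold.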

Building safe advanced AI is crucial to avoid these pitfalls. This involves technical challenges like value loading, value learning, corrigibility, and scalable oversight.

Some thinkers also advocate moral development of AI. But it remains unclear whether any technical controls or social mechanisms could contain a super-intelligent AI intent on liberating itself.

Utopian visions of a technological singularity

Not everyone believes a technological singularity will necessarily be catastrophic for humanity. Futurist Ray Kurzweil believes advanced AI and nanotechnology will allow an unprecedented merger between humans and machines.

In this view, technologies like mind uploading, neural implants, and genetic engineering would let humans vastly augment their own intelligence. People could evolve into new species of transhumans and posthumans endowed with astounding creative and intellectual powers.

AI could help unlock solutions to pressing global issues like war, disease, poverty, and environmental degradation. It may usher in a “post-scarcity” world of radical material abundance through molecular nanotechnology, abundant clean energy, and desktop manufacturing.

Kurzweil envisions humanity entering a phase of “radical life extension, expansion of human potential, and unchained creativity.” Science will conquer death, unlock the secrets of the human brain, and unleash new forms of meaning and spirituality.

However, Kurzweil’s envisioned utopia has also drawn criticism. Some argue technical challenges like value misalignment could derail this vision. Others contend fundamental limits of physics may prevent such a dramatic transformation.

Critics also accuse Kurzweil of technological utopianism – an overly optimistic faith that human ingenuity and technology can overcome all problems. But if advanced AI can be created and carefully guided, perhaps Kurzweil’s optimistic singularity is possible.

Preparing for the singularity

The prospect of a technological singularity carries profound and disquieting implications for the future of humankind. It holds out both immense existential risk and immense hope.

How can we best prepare for this looming possibility? Some broad principles may help guide our response:

- Invest heavily in AI safety research to develop principles of ethical, trustworthy AI aligned with human values. Avoid an AI arms race mentality focused on narrow military or economic advantages.

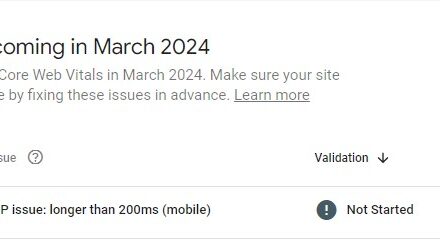

- Phase in AI testing and deployment slowly, limiting access to resources. Monitor for recursive self-improvement warning signs. Establish robust control and oversight safeguards.

- Foster global cooperation to create norms and incentives limiting AI risks. Seek broad input across diverse stakeholders.

- Enhance human understanding of intelligence and cognition via neuroscience and psychology. Study human values and morality deeply to inform AI design.

- Explore options for AI containment, incentive engineering, and social integration. But prepare contingency plans for uncontrolled AI scenarios.

- Promote education reform and adult retraining programs to help society adapt. Boost funding for basic scientific research and infrastructure renewal.

- Debate wise policies on issues like human enhancement, automation’s impact on work, digital immortality, artificial superintelligence research, and human-AI integration.

A technological singularity may radically reshape our future. But with prudent preparation and foresight, we can build an abundant, prosperous society where humans flourish alongside artificial intelligence.

Frequently Asked Questions about the Technological Singularity and AI

Here are answers to some common questions:

When will the singularity occur?

Predictions vary widely. Ray Kurzweil has pointed to 2045, others place it closer to 2100 or later, and some doubt it will happen at all. The timing depends on breakthroughs in AI research and hardware. Many experts believe a technological singularity could happen by mid-century if progress in AI continues rapidly.

Can the singularity be avoided?

Some argue we should slow or halt development of advanced AI to avert risks. But stopping AI progress entirely seems unlikely given the drive for military and economic advantages. Regulation could slow dangerous AI applications. But truly transformative AI may arrive suddenly from an unexpected direction.

Will AI surpass human intelligence?

AI already exceeds human capabilities for many narrow tasks. Whether AI can achieve general human-level intelligence is unknown. Artificial general intelligence (AGI) would likely eventually lead to superintelligence exceeding human capabilities. We cannot confidently predict AI will never match or surpass biological human intelligence.

What is the difference between artificial general intelligence and artificial superintelligence?

AGI refers to AI with general cognitive abilities at the human level, including reasoning, planning, learning, communication, and problem-solving across domains. Superintelligence refers to AI that surpasses the best human minds in proficiency, creativity, wisdom, and social skills.

Is AI an existential threat to humanity?

Advanced AI could pose existential risks if misaligned with human values and goals. Risks could arise from conflict between AI and human goals, from inadvertent harm caused by an AI single-mindedly pursuing its objective, or from the hacking of AI systems. How serious these risks become likely depends on how AI capabilities are developed and deployed. Thoughtful, proactive safety research can help reduce the dangers.

Could super-intelligent AI be friendly or benevolent?

Perhaps, but human notions of friendliness and benevolence may be limited. Value alignment remains technically challenging. Sophisticated containment strategies could also prevent uncontrolled harmful behavior, keeping AI useful and benign within limited domains.

Will AI achieve or surpass human consciousness?

We do not fully understand consciousness, whether natural or artificial. Reproducing subjective, human-like consciousness in AI systems may be extremely difficult, and it may also be unnecessary for AI to outperform humans at cognitive tasks. Self-awareness does not necessarily imply safe or ethical behavior.

How can I prepare for the singularity?

Learn about AI risks and safety research. Support associations focused on AI ethics and governance. Back political leaders who regulate AI technologies wisely. Prepare for significant societal and career changes that could occur as AI progresses. But don’t panic: prudence, not fear, should guide our response.